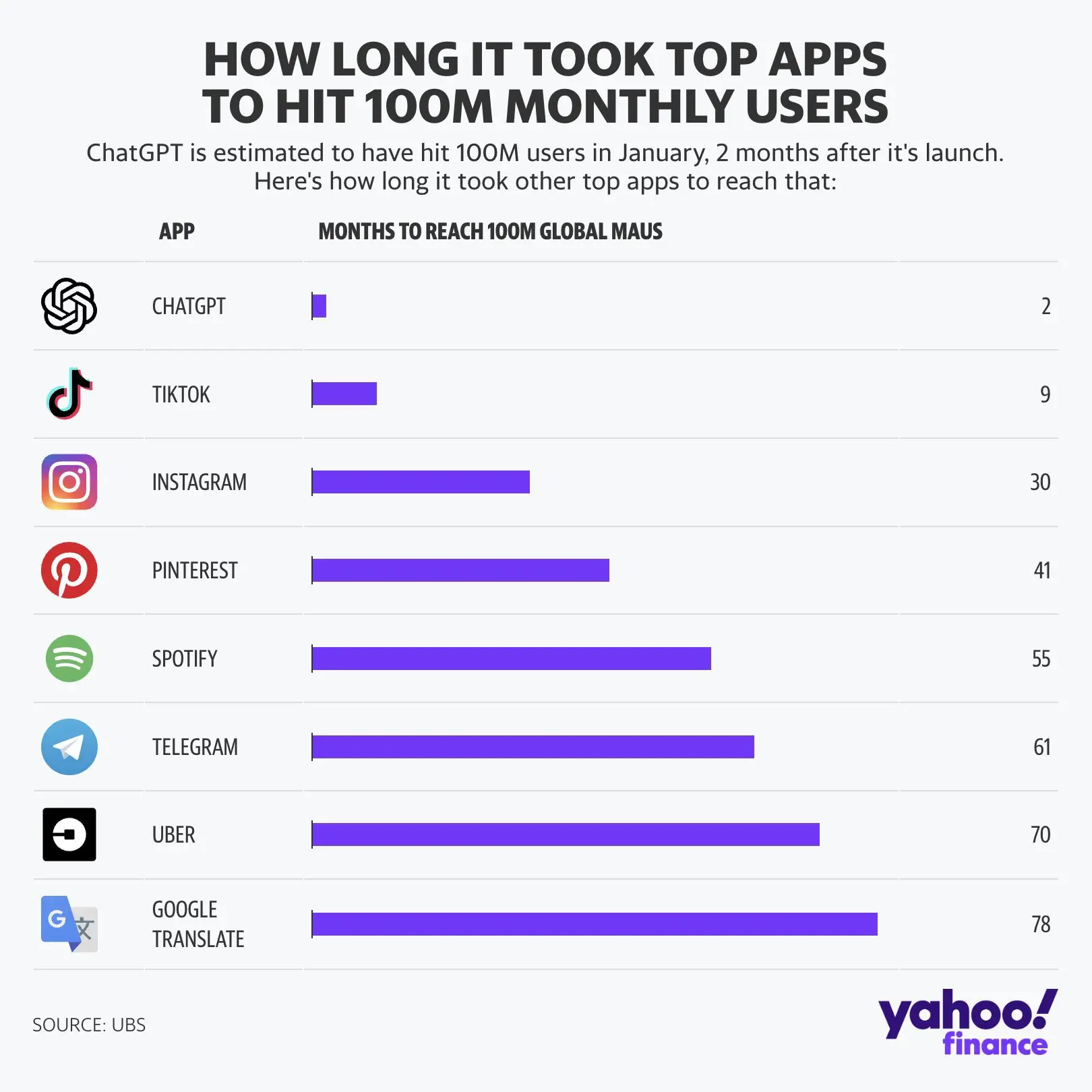

Ever since ChatGPT came out, the tech world has jumped on a new hype train—just like it did before with crypto, NFTs, and the metaverse. This time, I think the hype spread even faster because it was so easy to try—just open a website and start typing. ChatGPT quickly became one of the fastest-growing products ever, reaching 100 million users in 2 months. Like past trends, it also brought a lot of debate and strong opinions. I’ve used ChatGPT and other large language models (LLMs), and I’ve even added them to products at work. But even with all that, I’m still not on the AI hype train. In this post, I’ll explain why.

Creation of the Models

The models behind ChatGPT and others are trained using text from across the internet. The problem is, these companies didn’t ask for permission from the people or websites that created the content. They just crawled the web and copied whatever they could find. While doing that, they also caused a lot of traffic to some websites, which led to higher bandwidth costs for those sites. (How OpenAI’s bot crushed this seven-person company’s website ‘like a DDoS attack’) Big publishers like news websites have already sued OpenAI for this (The New York Times is suing OpenAI and Microsoft for copyright infringement), and some have made deals to license their content (A Content and Product Partnership with Vox Media). But smaller websites and creators didn’t get a choice at all.

Some companies, like Meta, went even further. They didn’t just use web content—they also used pirated books to train their models. (The Unbelievable Scale of AI’s Pirated-Books Problem) If a regular person did this, they’d probably get into serious trouble. But when billion-dollar companies do it, they usually get away with it. And if they do get fined, it’s often such a small amount that it doesn’t even affect their yearly profits.

The way these models are trained is already a big issue. But a bigger problem is that they use all kinds of content from the internet. They do this because they need huge amounts of data to make the models better. That’s why Meta turned to pirated books: they had already used most of what was available online. And this is where things get risky. While most people only visit a handful of websites, the internet is full of harmful content. These models are trained on all of it, and that means they can end up repeating or using that harmful content in their answers. (The risks of using ChatGPT to obtain common safety-related information and advice) Since the models often give answers with a lot of confidence, it can be hard, especially for younger people, to tell whether something is right or wrong.

Once the companies collect the data, they start training the models. This isn’t like running a normal website. It requires special data centers with powerful graphics cards built for AI training. These cards use a lot of electricity, which means more energy needs to be produced. That’s why companies like Microsoft have made deals to reopen old power plants. (Three Mile Island nuclear plant will reopen to power Microsoft data centers) Some companies are even building new power plants that run on fossil fuels. (AI could keep us dependent on natural gas for decades to come)

But it’s not just about needing more electricity. These data centers also cause other problems. For example, they can affect the electricity grid and cause small but important changes to the frequency of the power. (AI Needs So Much Power, It’s Making Yours Worse) That can mess with electronic devices in homes near the data centers. Because they use so much electricity, they also produce a lot of heat. To cool everything down, they need large amounts of water, which can create issues for local water supplies. (AI is draining water from areas that need it most) The situation has gotten so bad that companies like Microsoft, which once aimed to become carbon negative by 2030, might not be able to meet those goals. (Microsoft’s AI obsession is jeopardizing its climate ambitions)

Usage of the Models

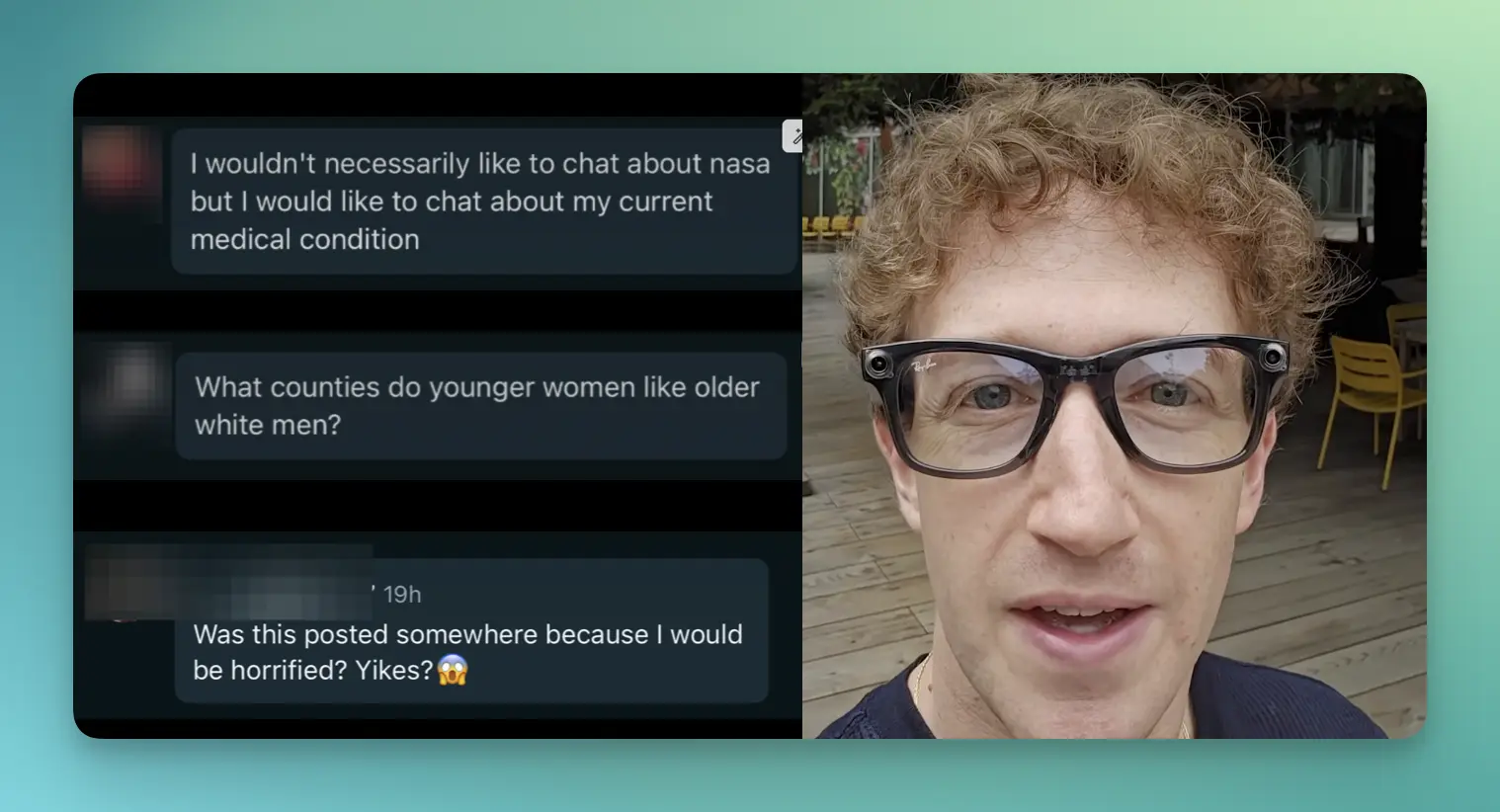

After using huge amounts of data and electricity to train these models, you’d expect something truly useful. But even though companies promote these models like they can solve the world’s biggest problems, the way people actually use them can be disappointing. A lot of students use these tools to do their homework, both in school and university. (26% of students ages 13-17 are using ChatGPT to help with homework, study finds) When teachers notice that homework quality has gone up, they start using these tools too—to grade the homework. (Teachers are using AI to grade essays.) Lawyers have also been caught using AI tools to help with their legal cases. (Law firm restricts AI after ‘significant’ staff use) Many people now use AI for advice on money, relationships, or even health. (AI models miss disease in Black and female patients) And if you look at the leaked chats with Meta AI, you can see how everyday people are really using these tools. (Meta Invents New Way to Humiliate Users With Feed of People’s Chats With AI)

These use cases are often ignored, especially by software developers who push AI tools heavily. As a software developer myself, I can say that many in our field don’t really think about the long-term impact of the tech they build. They’re often focused on interesting problems and high salaries. For developers, it’s easy to test the output of these models by running the code or writing automated tests. If something breaks, you can just undo it. But for others—like students or lawyers—it’s not that simple. A student might get a bad grade (School did nothing wrong when it punished student for using AI, court rules), and a lawyer might embarrass themselves in front of a judge. (Mike Lindell’s lawyers used AI to write brief—judge finds nearly 30 mistakes)

There’s also a growing group of people using these tools to create content just to go viral or make money from ads or affiliate links. This floods social media and websites with low-quality, copy-paste content. (AI Slop) Even newspapers have used these tools to make book lists that include books that don’t even exist. (How an AI-generated summer reading list got published in major newspapers)

Worse, some people use AI tools to replace real social interaction. There are now startups offering AI girlfriends. (AI Tinder already exists: ‘Real people will disappoint you, but not them’) Kids talk to these tools like they’re real friends—some have ended up with serious mental health problems, and in tragic cases, even suicide. (A 14-Year-Old Boy Killed Himself to Get Closer to a Chatbot. He Thought They Were In Love.) People now argue by quoting AI responses instead of doing real research. I’ve had friends who do this—they trust ChatGPT over actual facts. I end up spending time correcting them with real, trusted sources, especially on serious topics like law, taxes, money, and health.

The long-term risks are even bigger. We might raise a generation that doesn’t know how to write essays, can’t read and understand long articles, can’t communicate well, and struggles with real relationships. (Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task) Most people don’t know that these models don’t really “understand” anything—they just predict the next word in a sentence. They’re not intelligent, but they look smart because they talk like us. Companies use words like “thinking” and “understanding” to describe these tools, which makes people believe the models are smarter than they really are. In truth, these models can make things up completely. (What Happens When People Don’t Understand How AI Works)
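To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT or any real LLM is implemented (those use large neural networks over tokens); it is a toy bigram counter, with a made-up corpus and hypothetical function names, that only illustrates the idea of picking a likely continuation from statistics rather than from understanding.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
print(predict_next(model, "dog"))  # None: the toy model has never seen "dog"
```

The toy model answers "cat" after "the" only because that pairing is the most frequent one in its training text. It has no notion of what a cat is.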

Many companies now push these tools as replacements for entry-level employees—especially interns and junior roles. But they don’t think about what happens later, when there are no trained juniors to become seniors. Klarna, for example, replaced their customer support with AI (Klarna CEO says the company stopped hiring a year ago because AI ‘can already do all of the jobs’), but later had to go back to using humans. (Klarna Slows AI-Driven Job Cuts With Call for Real People) Because at the end of the day, people want to talk to other people—especially when they need help.

More and more people will depend on AI tools and slowly lose their ability to think for themselves. As a software developer, you might feel like you’re working faster thanks to AI. But your own skills might slowly get worse. And by the time you realize it, you might be out of a job—and not ready for the next one. The scariest part is, you might not even notice it’s happening, because the output at work still looks good.

Money Problem

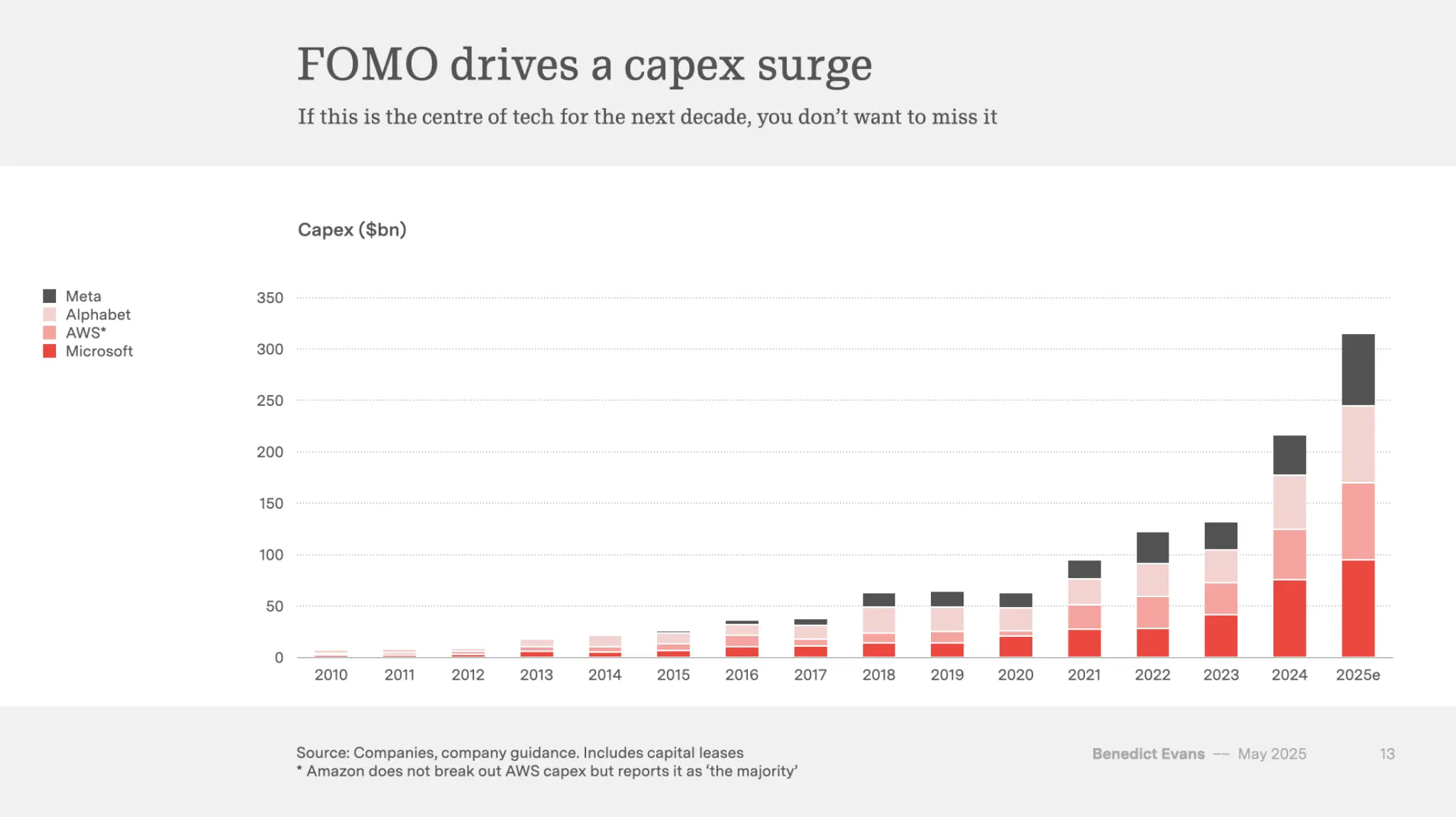

When you see AI everywhere, it’s not by chance. It’s because companies are spending huge amounts of money on it. Big names like Meta, Microsoft, Amazon, and Google are spending tens of billions of dollars every few months just to buy the graphics cards needed to train these models. They’ve spent so much money, but they aren’t making enough in return from the AI tools they offer. (AI’s $600B Question) To make their financial reports look good, they’ve started laying off employees. (The ‘white-collar bloodbath’ is all part of the AI hype machine) At the same time, they say AI is making them more productive, so they don’t need as many workers.

Smaller companies are copying what the big ones are doing. They’re also putting money into AI and claiming they’re getting more work done. But in many office jobs, it’s hard to actually measure productivity in a clear way. (Cannot Measure Productivity) Because of all this pressure, even Apple, usually slow to jump on tech trends, felt the need to quickly release “Apple Intelligence.” But many of the announced features, like Swift Assist and the Siri improvements, aren’t even available yet, and the parts that were released aren’t being used much. (Apple’s AI isn’t a letdown. AI is the letdown) The hype has now grown so big that both companies and governments are being pushed to use AI, just so the investors who poured billions into it can try to get their money back. (The US intelligence community is embracing generative AI) Meanwhile, there are startups with no real product being bought for billions of dollars, like the one Jony Ive is working on. (OpenAI is buying Jony Ive’s AI hardware company for $6.5 billion) If you look at how these startups are valued, it’s hard to see how they could ever make enough money to justify it. (AI Valuation Multiples 2025)

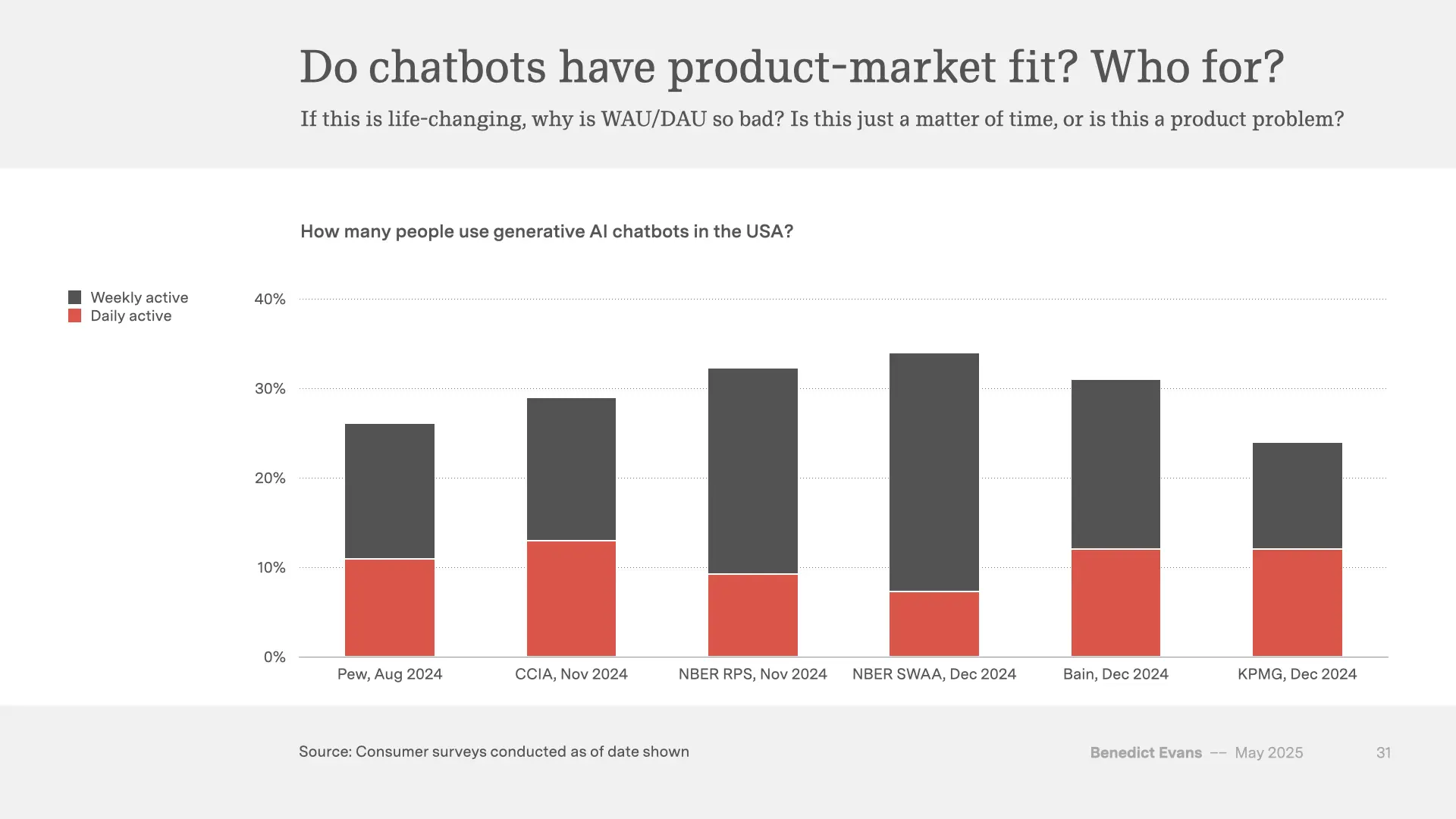

All this leads to a big problem: monetization. ChatGPT has around 500 million weekly active users, but only around 20 million of them actually pay for a subscription. That means the vast majority of people, roughly 96%, don’t think it’s worth $20 a month. (OpenAI Is A Systemic Risk To The Tech Industry) Other companies like Canva and Salesforce, which have added AI features to their tools, have also raised their prices. (Canva says its AI features are worth the 300 percent price increase, Salesforce Increases Prices as it Promotes New AI Features) Even if you never use the AI tools, you still have to pay the higher price. That’s because they’ve spent so much money building and running those features that they have to make up for it somehow. Because only a small number of people are paying, companies like OpenAI, Anthropic, Microsoft, and Meta offer AI tools for free, but with limits. And as anyone in tech knows: if something is free, you are the product. People often share very personal thoughts with these tools, sometimes things they wouldn’t even tell friends or family. That lets companies build detailed user profiles, which they can then use to sell ads and try to make money. (ChatGPT is getting ‘memory’ to remember who you are and what you like)
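The gap between users and payers is easy to check with the rounded figures quoted above. This is a back-of-the-envelope sketch, not a financial model: the variable names are made up, and the inputs are the approximate numbers from the text.

```python
# Approximate figures quoted in the text above.
weekly_active_users = 500_000_000
paying_subscribers = 20_000_000

# Share of weekly users who pay, and how many do not.
conversion_rate = paying_subscribers / weekly_active_users
free_users = weekly_active_users - paying_subscribers

print(f"Paying share: {conversion_rate:.0%}")  # Paying share: 4%
print(f"Free users:   {free_users:,}")         # Free users:   480,000,000
```

In other words, about one in twenty-five weekly users pays, and the other 480 million or so still have to be served.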

But even that won’t be enough. The investment money in AI is bigger than anything we’ve seen in tech—or maybe in any industry. So now governments are stepping in with contracts. (Introducing OpenAI for Government, Expanding access to Claude for government) These tools will be used for surveillance—not just for their own citizens, but for people in other countries too. There are already reports that the U.S. military has hired employees from companies like Meta and OpenAI. (The Army’s Newest Recruits: Tech Execs From Meta, OpenAI and More) It’s also known that companies like Microsoft and OpenAI are getting government contracts. (OpenAI wins $200m contract with US military for ‘warfighting’) One day, you could use ChatGPT to talk about a sensitive topic, and later find yourself denied a visa or even deported—without ever knowing why.

Since most individual users aren’t paying, the money is coming from big companies. I know some of them are buying bulk licenses from companies like Anthropic and Cursor, and then pushing their developers to use these tools. That might help companies work faster, but employees don’t benefit from this productivity boost—in fact, they may become even more dependent on AI. (Time saved by AI offset by new work created, study suggests) And when the company decides to lay off workers, those same people may have to keep using AI tools on their own and start paying for it out of pocket. Right now, the most advanced AI models cost up to $200 a month. Not long ago, it was just $20. Even at those prices, companies like OpenAI and Anthropic still aren’t profitable—so they might raise prices again. In the end, you could find yourself having to pay a lot of money just to keep doing your job.

Motivation of the Companies

If you want to understand what’s going on in the tech industry right now, you need to look at what motivates these companies. There are two groups: the new players and the existing tech giants.

OpenAI started as a non-profit, but now it’s the face of the AI hype. (The Accidental Consumer Tech Company) It’s trying to break into the ranks of the big tech companies—maybe even become the next Apple. Some former OpenAI employees started Anthropic, but outside of software developers who use Claude Code, not many people even know about it. These companies all have one goal: to become the next big tech.

As for the big tech companies—Microsoft, Google, and Meta—they’re trying to protect their position. Microsoft has added AI features to all its products, but I doubt anyone at Microsoft really believes these features will directly make a lot of money, other than letting them raise prices on existing products. (Microsoft bundles Office AI features into Microsoft 365 and raises prices) Their real focus is on the infrastructure—providing the tools and servers that others use to train and run AI models.

Google is in panic mode. Their entire business depends on search, and search is where they make most of their ad money. Now that AI could change how people search, they’re doing everything they can to keep up—but it’s not going well. (Google defends AI search results after they told us to put glue on pizza)

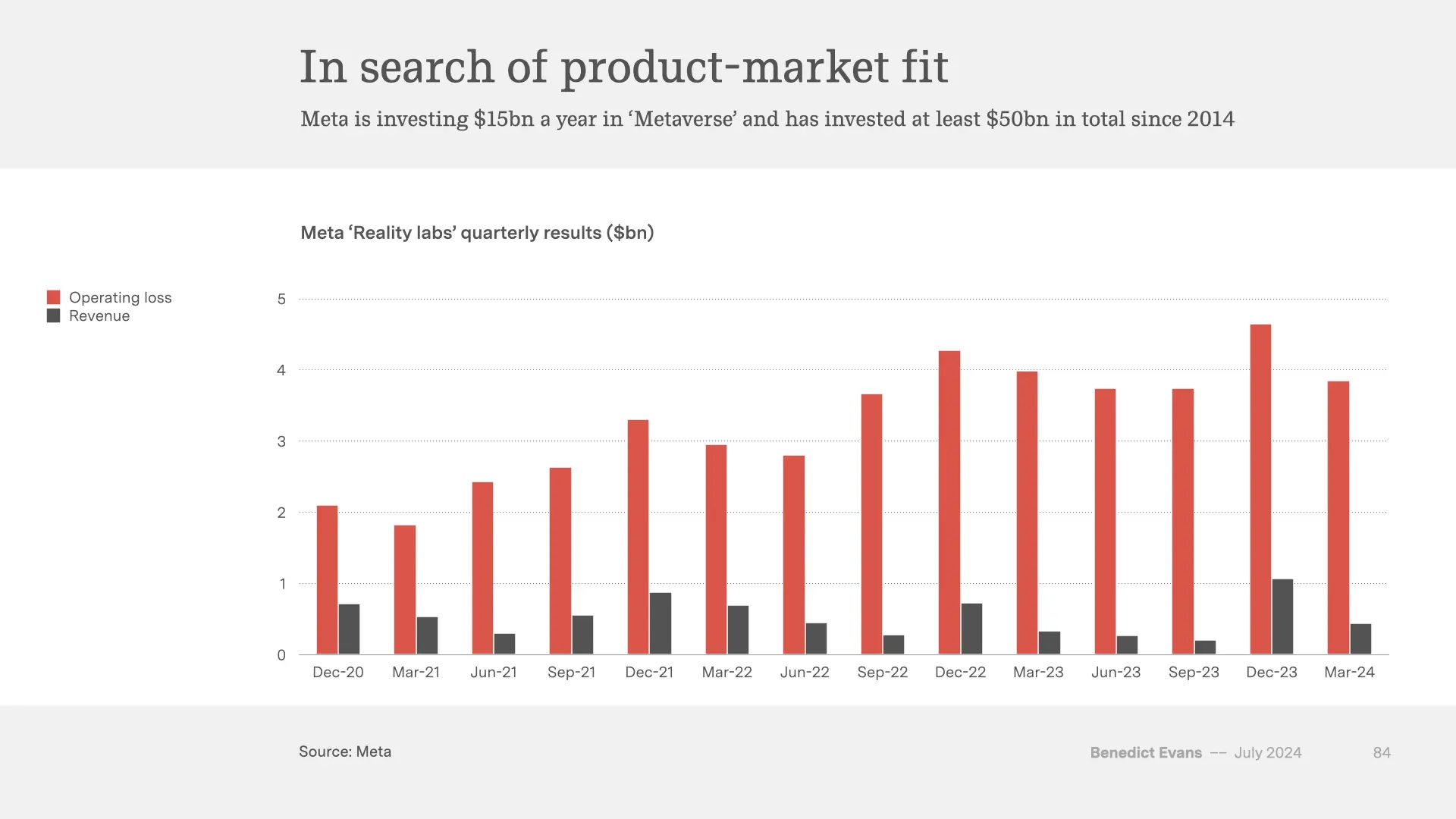

Meta tried to stand out by releasing their models to the public. But people quickly lost interest when they saw the low quality of the models—and the fact that Meta manipulated the results of model tests to make them look better than they actually were. (Meta cheats on Llama 4 benchmark) Mark Zuckerberg seems obsessed with finding the “next big thing.” First, he poured $50 billion into the metaverse, but I don’t know anyone who actually uses it. (The VR winter continues) Now he’s putting AI into every Meta product—WhatsApp, Instagram, Facebook—and calling it “Meta AI.” Lately, he’s even been buying startups and hiring top talent to build a team focused on creating “superintelligence.” (Meta launching AI superintelligence lab with nine-figure pay push, reports say)

Amazon and Apple, meanwhile, are trying to catch up. Maybe they didn’t think the AI wave would be this big. Maybe they didn’t have enough data or talent. Or maybe they just want to take a different path. To me, Apple stands out. They aren’t trying to build a chatbot that knows everything. Instead, they’re focused on using AI as a tool to help people interact with their apps and data in smarter ways. They’re also working on on-device AI and private cloud systems, so your data stays on your device, and when it doesn’t, it’s handled with privacy in mind. (Private Cloud Compute: A new frontier for AI privacy in the cloud) The downside is that Apple’s models aren’t very good yet. But I think they’ll catch up, especially since models from OpenAI and Anthropic have also stopped improving lately. (Recent AI model progress feels mostly like bullshit) What I really respect is that, unlike the others, Apple isn’t trying to make money from Apple Intelligence itself, because they’re already making a lot of money by selling you hardware.

What surprises me the most is how quickly people trust these new companies. They share personal thoughts, give access to their devices, and let these apps do all sorts of things. When you install their apps, you’re often giving them permission to control your computer. Some companies are integrating their APIs deep into their systems with just a few lines of code. And these AI companies? They’re not profitable. They don’t have a long history of earning trust. What they do have is a huge hunger for more data to train their models. (“I lost trust”: Why the OpenAI team in charge of safeguarding humanity imploded)

And finally, there’s one thing these companies keep shouting: “AI will replace jobs.” They say it all the time, but economists don’t fully agree. (Nobel Laureate Daron Acemoglu: Don’t Believe the AI Hype) I don’t think the people who built personal computers went around warning that typists would lose their jobs. The goal of these AI companies doesn’t seem to be making people’s lives better, but making them jobless.

Product Problem

ChatGPT didn’t start as a real product—it was more like a research tool. But over time, it turned into a way for people to interact with large language models. In some cases, a chat interface makes sense, but most of the time, I don’t think it’s efficient.

If you’re looking something up, you usually type a few keywords and get a list of links. But with a chatbot, you have to write full sentences, and how fast you can type limits how fast you can interact. Then, instead of getting quick, scannable links, you get a big block of text. You read it—but you’re always aware it might be wrong. On a regular search engine, you can judge a source just by looking at the domain of the website, the design of the page or even reading the “About” page. You can make quick decisions with a few clicks. In short, searching and skimming is usually much faster than reading chatbot answers. It’s like someone describing a view in a paragraph vs. just showing you a picture. OpenAI seems to have realized this, because they added a voice interface. (ChatGPT can now see, hear, and speak) That might work for some people, but it’s also slow. You can’t skim a voice reply, and it’s not private—you can’t really talk to it in public. So it’s still not a perfect solution.

Eventually, these companies tried something new: agents. The idea is that instead of just answering questions, the AI would actually do things for you—like a smart assistant. But in order for that to happen, someone first has to define all the functions the agent can handle. Then, when you ask the chatbot for help, it picks the right tool, sends your request to the third party service, and shows you the result. This isn’t new. We’ve had bots that do things for years—the only difference here is that now you ask in a chat box instead of clicking on buttons.
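The flow described above (tools defined up front, a tool picked per request, the call handed off, the result shown) can be sketched in a few lines. This is a minimal illustration under loud assumptions: every name here is hypothetical, the tools are stubs instead of real third-party services, and the keyword match in `pick_tool` merely stands in for the choice a language model would make.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Tool:
    name: str
    keywords: tuple            # crude stand-in for the model's judgement
    run: Callable[[str], str]  # in a real agent this would call an external service

def translate(text: str) -> str:
    return f"[stub translation of: {text}]"

def calculate(text: str) -> str:
    return f"[stub calculation of: {text}]"

# Someone has to define every tool the agent is allowed to use.
TOOLS = [
    Tool("translate", ("translate",), translate),
    Tool("calculate", ("calculate", "sum"), calculate),
]

def pick_tool(request: str) -> Optional[Tool]:
    """Return the first tool whose keywords appear in the request, else None."""
    lowered = request.lower()
    for tool in TOOLS:
        if any(keyword in lowered for keyword in tool.keywords):
            return tool
    return None

def handle(request: str) -> str:
    """Dispatch the request to a tool, or admit that no tool is defined."""
    tool = pick_tool(request)
    if tool is None:
        return "No tool defined for this request."
    return tool.run(request)

print(handle("Translate hello to German"))
print(handle("Book me a flight"))
```

The second request fails because nobody defined a booking tool. The agent can only do what someone wired up in advance.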

At first, agents sounded exciting, especially for businesses. But again, there’s a catch: someone has to define every tool the agent can use. These models aren’t smart enough to just find a random tool online and figure out how to use it. So Anthropic released something called the Model Context Protocol (MCP), which is basically just a new way of writing an API doc—except this time, it’s for chatbots, not human developers. (Introducing the Model Context Protocol) You hook up all the MCPs you need, and your chatbot uses them to get things done. This is especially useful for people who don’t know how to code. I know many people who already use Zapier to automate their work. With agents, they won’t even need to build a flow—they can just ask the chatbot to do it.
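To illustrate the "API doc for chatbots" idea, here is a rough sketch of what a machine-readable tool description can look like. The `create_event` tool is hypothetical, and the field names (`name`, `description`, `inputSchema`) are an assumption loosely modeled on how MCP tool listings are commonly shown, so check the specification before relying on them. The helper function is likewise made up for illustration.

```python
import json

# Hypothetical tool description: a name, a human-readable purpose, and a
# JSON-Schema-style description of the arguments the tool accepts.
CALENDAR_TOOL = {
    "name": "create_event",
    "description": "Create a calendar event with a title and a start date.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "date": {"type": "string", "description": "ISO 8601 date"},
        },
        "required": ["title", "date"],
    },
}

def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]

# This description is what the chatbot would "read" instead of human docs.
print(json.dumps(CALENDAR_TOOL, indent=2))

# A call that forgets the date can be rejected before anything runs.
print(missing_arguments(CALENDAR_TOOL, {"title": "Dentist"}))
```

The description plays the role an API reference plays for a human developer: it tells the model what the tool is for and which arguments it must provide.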

But here’s the real issue: if agents just use third-party tools to do the actual work, like translation, calculation, or retrieving information, why are these companies spending so much time and money training massive language models? These models take months to train and use more electricity than an entire village. If their only job is to talk to the user and hand off tasks, we don’t need models with all of human knowledge. We just need one that’s good at chatting and picking the right tool. If you need information, just connect to something like Wikipedia’s MCP.

That’s why I think companies like OpenAI, Anthropic, and Meta have a serious product problem. On the other hand, companies like Apple and Google already have an edge. They own the platforms (macOS/iOS, Android) and they can build their own MCPs for default apps like Mail, Calendar, Notes, and so on. Apple has already promised this but hasn’t been able to ship it yet. (Leaked Apple meeting shows how dire the Siri situation really is) They can also let other developers create MCPs that plug into their systems. That’s a real ecosystem, and it might actually work. (Integrating actions with Siri and Apple Intelligence)

Maybe that’s why Anthropic launched Claude Code. Coding is very structured. You can test the output easily—run it, write tests, see if it works. For software developers, tools like this can boost productivity and save time on things like reading documentation or hunting down bugs. But for most other professions, I don’t think these tools help much. Honestly, I see it more like how Figma made designers more productive compared to Photoshop. Figma didn’t get hyped like this, even though it costs about the same ($20/month). And Figma was valued at $20 billion—after six years of actual product usage and growth. (Adobe to acquire Figma in a deal worth $20 billion)

It’s not only these companies: every company is now racing to create a product with “AI”. What they really mean is: “you can now use our app through a chat window.” That’s it. They’re marketing it like it’s a huge innovation, but most users don’t even use these features. (Customers don’t care about your AI feature)

Feel the AGI

One of the biggest problems with the current AI hype is the people who are driving it. The folks who built these models or launched startups around them act like they’re changing the world. Because they’ve raised millions in funding, they already see themselves as successful—even if their companies don’t make any profit. This is especially true for people who work at OpenAI or used to work there. (Inside the Chaos at OpenAI) Some of them are treated like tech celebrities. There are even groups that act like fan clubs—or even cults—where everything these people say or do is cheered without question. (OpenAI’s anarchist science chief is a techno-spiritual culthead)

With so much attention and praise, it’s no surprise that they’ve started making bold claims. One of the biggest ones is that Artificial General Intelligence (AGI) is just around the corner—and that they’ll reach it using the same methods they used to build today’s large language models. (‘Empire of AI’ author on OpenAI’s cult of AGI and why Sam Altman tried to discredit her book) But there are many studies that show these models can’t really reason or think in the way humans do. (Proof or Bluff? Evaluating LLMs on 2025 USA Math Olympiad) Calling them “intelligent” just because they use language well feels misleading. (The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity)

Still, regular people hear these claims and get pulled in. They believe them without question. It’s becoming harder to explain to people what these models can and can’t do. Many truly believe that these tools can solve any problem. They ignore the warnings, don’t question the answers they get, and treat the tools like magic. (ChatGPT Is Becoming A Religion) In the end, we’re left with a group of people living in a kind of bubble. They believe these tools will change the world—but they’re not really looking at what’s actually happening.

Conclusion

This post turned out longer than I expected, but I wanted to cover every angle to explain why I’m not riding the AI hype train. There are just too many red flags—how the models are built, how they’re used, the impact on people and the planet, the money behind it all, and the motivations of the companies pushing it.

All those companies are throwing everything they’ve got behind AI like it’s destined to be the next big thing. But here’s the thing—none of the past “big things” were pushed like this. They didn’t get flooded with billions in investment before proving themselves. The iPhone, for example, wasn’t even designed to support third-party apps. That only changed because developers wanted in. It grew organically, from real demand. You can’t force a revolution by brute-forcing money into it.

That doesn’t mean these tools are completely useless. I use them myself, sometimes—for proofreading or solving tricky programming problems. But I don’t rely on them. I don’t pay for them. I don’t use them with an account. And I definitely don’t treat them like they’re going to change the world. They’re just tools. If they disappeared tomorrow, it wouldn’t affect how I work or live.

In the end, I see AI for what it is: a powerful but limited tool—not a revolution, not a replacement for human thinking, and definitely not something worth worshipping. It has its place, just like every other piece of tech that came before it. But I won’t buy into the hype. I’ll keep using it when it helps, ignore it when it doesn’t, and question it always. That’s not resistance—it’s just common sense.